Chatbots and AI assistants: Essential tools for improving customer service, or high-risk technologies prone to errors that could damage customer trust and create new liabilities?

As generative AI becomes more embedded in customer service, it's essential to understand both the legal and practical risks of rolling out a customer-facing chatbot - and how to mitigate them. Using our new content on global AI laws and regulation, we look at what organisations need to know.

What are the risks?

In 2024, an AI chatbot that New York City had launched to provide local businesses with actionable and trusted answers to their queries was instead found to be giving misleading advice. That advice was not only factually incorrect but, if followed, would have led to clearly unlawful activity.

That same year, we also saw the now infamous Canadian case of Moffatt v. Air Canada, which found that a business can be held directly liable for incorrect or misleading information given to individuals by a chatbot (the airline’s online chatbot had mistakenly confirmed a passenger’s right to a discounted airfare).

There are numerous other real-life examples, but the key takeaway is that, at least without appropriate safeguards, the risks raised by this relatively nascent technology (whether embarrassing hallucinations or direct liability created by the chatbot’s output) can cause material reputational or financial harm and attract regulatory scrutiny.

How can you mitigate those risks?

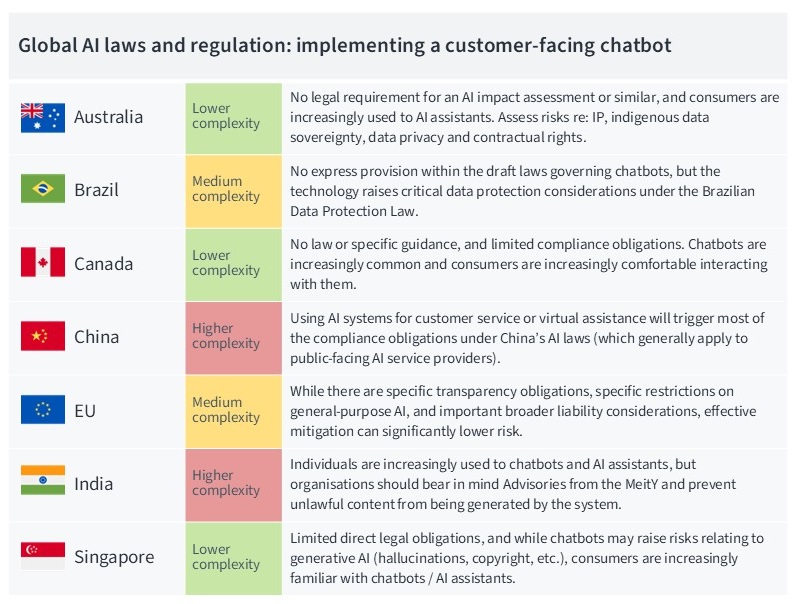

The first step is staying informed about the evolving legal and regulatory position around the world, where regulatory guidance, compliance frameworks and market practice are wide-ranging and constantly changing.

To help organisations navigate this complexity, Rulefinder Data Privacy’s AI Regulation & Governance module pulls together analysis and insights from lawyers across the globe on a number of practical AI scenarios, including the use of chatbots.

Here's a preview:

Beyond chatbots: embedding AI into global compliance frameworks

It is also necessary to consider the broader AI regulation and governance frameworks in place around the world. Checking your approach against the underlying laws and regulatory guidance helps to ensure that all areas of compliance risk have been adequately addressed.

Our AI Regulation & Governance module allows you to benchmark key global AI frameworks and compare obligations (such as transparency, human oversight, fairness and bias mitigation) around the world.

Practical steps to reduce risk

To mitigate risks, organisations should take a range of practical, operational steps, including:

- Prioritising accuracy and transparency: these are fundamental if a user is to trust the output of a chatbot, and at the same time understand its limitations

- Ensuring data integrity: avoid the "garbage in, garbage out" trap by training chatbots on accurate, trustworthy data, free from any sort of corruption

- Using trusted sources: train chatbots on trusted sources or internal documentation (such as policies or competition rules) to give them the strongest foundation for providing accurate answers

- Using disclaimers and other transparency wording: to clarify the nature of the output, its reliability or enforceability (e.g. by stating any need for human validation)

- Implementing guardrails: restrict or block chatbot responses to specific high-risk words, topics or questions

- Adding contractual protections: e.g. in situations where the chatbot is developed or provided by a third party, covering issues such as the allocation of liability for its output

- Assessing other legal risks: consider issues specific to your scenario such as intellectual property, data privacy or applicable consumer protection legislation
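
For teams working with developers, the guardrail and disclaimer steps above can be sketched in a few lines of Python. This is a minimal illustration only, not a recommended implementation: the topic list, the wording of the messages and the `generate` function (a stand-in for whatever produces the chatbot's answer) are all hypothetical assumptions, and a real deployment would need its own rules and legal review.

```python
import re

# Hypothetical high-risk topics; each organisation would define its own list.
HIGH_RISK_PATTERNS = [
    r"\brefunds?\b",
    r"\bdiscounts?\b",
    r"\blegal advice\b",
]

# Hypothetical wording; actual disclaimers should be reviewed by counsel.
DISCLAIMER = (
    "This answer was generated by an AI assistant and may need human validation."
)
ESCALATION = (
    "I can't help with that topic here. Please contact our support team."
)


def is_high_risk(question: str) -> bool:
    """Return True if the question mentions any configured high-risk topic."""
    return any(
        re.search(pattern, question, re.IGNORECASE)
        for pattern in HIGH_RISK_PATTERNS
    )


def guarded_reply(question: str, generate) -> str:
    """Apply the guardrail first, then append the disclaimer to any answer.

    `generate` is an assumed callable that takes the question and returns
    the chatbot's raw answer as a string.
    """
    if is_high_risk(question):
        # Guardrail: do not let the chatbot answer; route to a human instead.
        return ESCALATION
    return f"{generate(question)}\n\n{DISCLAIMER}"
```

Simple keyword matching like this will miss rephrased questions and may block harmless ones, so it is best read as a starting point for discussion rather than a complete safeguard.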

Explore our AI Regulation & Governance module

This new content module keeps you up to speed with:

- Global AI laws, guidance, regulators, and enforcement updates

- Key governance requirements - from transparency to AI safety

- Practical use cases - from chatbots to recruitment tools

It's part of Rulefinder Data Privacy and only available to subscribers.